What is Video Latency? The Definitive Guide for Broadcasters

Video latency is a major aspect of live streaming over the internet. It is a technical measure that affects the viewing experience of your stream.

There are several technical components that contribute to video latency, so the latency of your stream will depend on how your streaming setup is built and configured.

In this post, we’re going to answer all of your questions about video latency. We’ll start by discussing what video latency is in the context of live streaming before we take a look at some more technical aspects. We will determine what affects video latency and how to control the latency of your streams.

Table of Contents

- What is Video Latency?

- Why Does Latency Matter?

- What Affects Video Latency?

- What is an Average Video Latency?

- Recent Technological Developments

- AI Developments

- AI Integration in Streaming

- How to Measure Video Latency

- How to Reduce Latency While Streaming

- How to Reduce Latency at the Encoder

- Related Streaming Metrics

- Practical Tips for Latency Reduction

- Case Studies and Real-world Applications

- Video Latency on Dacast

- FAQs

- Conclusion

What is Video Latency?

Video latency is the time it takes for a video signal to travel from the source capturing the video to the viewer’s video player. This end-to-end measure is often called glass-to-glass latency. Latency depends on several technical factors, so it varies with your streaming setup.

This is not to be confused with “delay,” which is the intentional lapse in time between the capture and airing of a video. This is used to sync up streaming sources and give producers time to implement cinematographic elements.

Video latency is less intentional and more of a byproduct of the stream’s technical setup. The only thing you can do is try to reduce it as much as possible while maintaining the stream’s quality to give your viewers a real-time viewing experience.

Why Does Latency Matter?

Latency is very important for broadcasters because, in many cases, it affects the user experience. This is especially true for live streaming events that benefit from feeling lifelike.

Let’s say you are streaming a graduation ceremony. The moment that each student walks across the stage marks a major milestone for that student and their family. The loved ones who are watching the stream from home believe that they are experiencing that special moment in real time, or at least close to it. If your audience knows that your stream is running behind, the connection feels less real.

Many professionals in the streaming space argue that high latency is detrimental to the outcome of a stream since the more latency you have, the less lifelike your stream will be.

Latency is also an important consideration for video conferencing. Platforms like Zoom and Google Meet support real-time latency, which allows people to have digital conversations that mimic face-to-face interactions. Without real-time latency, video chatting would not be possible.

But if you’re broadcasting an event where you want viewers to see what’s happening in real time, you have to try to reduce the latency as much as possible.

What Affects Video Latency?

Different parts of a live streaming setup contribute to latency. Everything from your internet network to your live streaming video host causes latency.

With that in mind, let’s review some things that contribute to video latency.

Internet Connection

Fast and reliable internet is a must for live streaming. When your internet connection is not up to par, your video latency will increase. The speed, or throughput, of your internet connection directly affects the latency of your stream.

The suggested internet speed is double the bandwidth you intend to use for your video stream, so higher-resolution videos need faster internet in order to keep the latency low.

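You can test your internet speed by searching “internet speed test” on Google and clicking “Run Speed Test” on the first result. Pay particular attention to the upload speed, since that is the speed at which your stream is sent.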
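Once you have a measured upload speed, checking it against the double-the-bandwidth rule is simple arithmetic. The Python sketch below assumes your stream’s bandwidth is its video bitrate plus its audio bitrate; the function names and the example bitrates are hypothetical.

```python
def required_upload_mbps(video_kbps: float, audio_kbps: float, headroom: float = 2.0) -> float:
    """Suggested upload speed in Mbps: total stream bitrate times a headroom factor."""
    total_kbps = video_kbps + audio_kbps
    return total_kbps * headroom / 1000.0


def upload_is_sufficient(measured_upload_mbps: float, video_kbps: float,
                         audio_kbps: float, headroom: float = 2.0) -> bool:
    """True if a measured upload speed meets the suggested minimum."""
    return measured_upload_mbps >= required_upload_mbps(video_kbps, audio_kbps, headroom)


if __name__ == "__main__":
    # Hypothetical 1080p stream: 4,500 kbps video plus 128 kbps audio.
    print(f"Suggested minimum upload: {required_upload_mbps(4500, 128):.2f} Mbps")  # 9.26 Mbps
    print(upload_is_sufficient(10.0, 4500, 128))  # True
    print(upload_is_sufficient(8.0, 4500, 128))   # False
```

Raising the resolution raises the video bitrate, and the required upload speed rises with it, which is why higher-resolution streams need faster connections.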

We recommend using an Ethernet connection for the fastest, most reliable internet. WiFi and cellular data can be used as backup options, but Ethernet is the preferred choice. No matter which option you choose, run a speed test before your stream to make sure it is good to go.

One reason for this is that Ethernet typically offers lower and more consistent latency than WiFi, with less packet loss. That difference can have a noticeable impact on your video’s overall latency.

Encoder Settings

With online streaming, a video needs to be encoded, transported, and decoded. This is where a large portion of latency is caused, so it is important that your encoder settings are properly set up and configured to streamline this process.
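One way to reason about where latency comes from is to treat the end-to-end figure as the sum of the delays added at each stage. The Python sketch below does exactly that; the stage names and millisecond values are hypothetical placeholders for illustration, not measurements of any particular platform.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    delay_ms: float


def total_latency_ms(stages: list[Stage]) -> float:
    """End-to-end latency is the sum of the delays added by each stage."""
    return sum(stage.delay_ms for stage in stages)


def largest_contributor(stages: list[Stage]) -> Stage:
    """Return the stage that adds the most delay (the first place to optimize)."""
    return max(stages, key=lambda stage: stage.delay_ms)


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    pipeline = [
        Stage("capture", 33),
        Stage("encode", 150),
        Stage("ingest (upload)", 100),
        Stage("transcode and package", 2000),
        Stage("CDN delivery", 200),
        Stage("player buffer", 6000),
        Stage("decode and display", 50),
    ]
    print(f"Total: {total_latency_ms(pipeline) / 1000:.2f} s")
    print(f"Largest contributor: {largest_contributor(pipeline).name}")
```

Breaking latency down this way shows where to focus: shaving milliseconds off one stage matters little if another stage is adding several seconds.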

While there is no magic combination of encoder settings that works for low-latency streaming across the board, it is important to check with your chosen streaming video host to see what settings they require or recommend.

Dacast, for example, requires that broadcasters use an H.264 codec (such as the x264 encoder), a frame rate of 25 or 30, a keyframe interval of 2 seconds, and progressive scanning. To get a better idea of the optimal encoder settings for reducing your video latency, we recommend checking out our complete guide to encoder setting configurations.
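With a 2-second keyframe interval, the number of frames between keyframes (the GOP size) is the frame rate times two: 60 frames at 30 fps, or 50 at 25 fps. The Python sketch below computes that value and assembles an illustrative FFmpeg command for sending H.264 over RTMP. The FFmpeg and libx264 options shown are standard ones, but the input file, RTMP URL, bitrates, and preset are placeholders, and this is not a Dacast-published configuration.

```python
def keyframe_interval_frames(fps: int, interval_s: float) -> int:
    """Convert a keyframe interval in seconds into a GOP size in frames."""
    return round(fps * interval_s)


def build_ffmpeg_args(input_path: str, rtmp_url: str, fps: int = 30,
                      interval_s: float = 2.0, video_kbps: int = 4500) -> list[str]:
    """Assemble an illustrative FFmpeg command for H.264 over RTMP.

    Values are placeholders; use the settings your streaming host recommends.
    """
    gop = keyframe_interval_frames(fps, interval_s)
    return [
        "ffmpeg",
        "-re", "-i", input_path,       # read the input at its native frame rate
        "-c:v", "libx264",             # H.264 video via the x264 encoder
        "-preset", "veryfast",         # faster presets spend less time per frame
        "-tune", "zerolatency",        # x264: no lookahead or B-frames, sliced threads
        "-r", str(fps),                # constant output frame rate (e.g. 25 or 30)
        "-g", str(gop),                # maximum keyframe interval, in frames
        "-keyint_min", str(gop),       # minimum keyframe interval, in frames
        "-sc_threshold", "0",          # no extra keyframes at scene changes
        "-bf", "0",                    # no B-frames
        "-b:v", f"{video_kbps}k",
        "-pix_fmt", "yuv420p",         # widely compatible 4:2:0 pixel format
        "-c:a", "aac", "-b:a", "128k",
        "-f", "flv", rtmp_url,         # RTMP ingest carries an FLV stream
    ]


if __name__ == "__main__":
    args = build_ffmpeg_args("input.mp4", "rtmp://ingest.example.com/live/STREAM_KEY")
    print(" ".join(args))
    # To actually run it: subprocess.run(args, check=True)
```

Setting `-keyint_min` equal to `-g` and disabling scene-cut keyframes keeps keyframes evenly spaced, which helps a packager cut HLS segments of consistent length.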

Video Protocols

Video protocols play a major role in latency. Different streaming protocols are capable of streaming with different amounts of latency.

Currently, the optimal protocol choice for low-latency streaming is HTTP Live Streaming (HLS) for delivery and Real-Time Messaging Protocol (RTMP) for ingest. HLS is the most widely supported protocol for streaming because it works with HTML5 video players. However, the RTMP ingest aspect significantly lowers the latency.

HLS delivery with HLS ingest is possible, but it does not support low-latency streaming like the HLS delivery/RTMP ingest combination does.

Secure Reliable Transport (SRT) is an open-source video streaming protocol originally developed by Haivision. It is known for secure, low-latency streaming. When SRT was new, few live streaming platforms and related tools supported it, but adoption has grown considerably, as covered in the SRT section below.

Another newer technology that was created to reduce video latency is WebRTC. WebRTC is an open-source streaming project that was designed to enable streaming with real-time latency. It was specifically designed with video conferencing in mind. This project is currently used by major video conferencing platforms, but it could help live video streaming platforms reduce their latency, as well.

Secure Reliable Transport (SRT)

By 2025, Secure Reliable Transport (SRT) has become a widely adopted protocol for secure, low-latency streaming. Its main strength is its ability to deliver stable video even over challenging network conditions. Many modern live streaming platforms now support SRT, making it easier for broadcasters to deliver high-quality streams with minimal delay. SRT supports AES encryption to protect data in transit, adding security without sacrificing performance. The protocol is now an essential part of the broadcasting ecosystem.
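SRT’s resilience comes from retransmission: the receiver holds packets for a configured latency window, and a lost packet can be re-requested and resent as long as that round trip fits inside the window. A common rule of thumb is to size the window as a multiple of the network round-trip time (RTT). The Python sketch below illustrates that arithmetic; the multiplier of 4 is an assumption for illustration, and the 120 ms floor mirrors SRT’s default latency setting. Consult the SRT documentation or your platform’s guidance for real deployments.

```python
def suggested_srt_latency_ms(rtt_ms: float, multiplier: float = 4.0,
                             floor_ms: float = 120.0) -> float:
    """Size the SRT latency window as a multiple of RTT, never below a floor.

    The multiplier is an illustrative assumption, not an official value.
    """
    if multiplier <= 0:
        raise ValueError("multiplier must be positive")
    return max(rtt_ms * multiplier, floor_ms)


def retransmission_attempts(latency_ms: float, rtt_ms: float) -> int:
    """Roughly how many request-and-resend round trips fit in the window."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    return int(latency_ms // rtt_ms)


if __name__ == "__main__":
    for rtt in (20, 80, 250):
        window = suggested_srt_latency_ms(rtt)
        print(f"RTT {rtt} ms -> window {window:.0f} ms, "
              f"~{retransmission_attempts(window, rtt)} retransmission round trips")
```

The trade-off is explicit: a larger window survives worse networks, but every millisecond of it is added directly to end-to-end latency.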

WebRTC

WebRTC, originally developed for video conferencing, has expanded into live streaming, providing real-time latency for a variety of applications. It is particularly useful where low latency is crucial, such as interactive streaming or live broadcasts. WebRTC supports peer-to-peer connections, which help minimize delays. Its versatility has made it an increasingly popular choice for broadcasters looking to give their audiences real-time experiences.

Emerging Protocols

The High Efficiency Streaming Protocol (HESP) is a newer protocol that aims to reduce video latency to under one second, giving broadcasters a faster streaming experience. HESP focuses on rapid channel switching and near-instant delivery of live content. It stands out as a promising alternative to older protocols like HLS and DASH, which typically have higher latency because players buffer several segments of video before playback. As more broadcasters look to improve the viewer experience, HESP offers a modern option for faster, more responsive streaming.
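To see why segment-based protocols like HLS carry more latency, note that the HLS specification (RFC 8216) advises players not to start playback closer than three target durations from the end of the playlist. The Python sketch below turns that into a rough lower bound on the delay added by buffering alone; it ignores encoding, upload, CDN, and decode time, and the segment durations are example values.

```python
def hls_buffer_latency_s(target_duration_s: float, segments_from_end: int = 3) -> float:
    """Approximate minimum delay HLS playback adds from segment buffering alone.

    Assumes the player begins `segments_from_end` target durations behind the
    end of the playlist, per the RFC 8216 guidance; encoding, upload, CDN,
    and decode time would come on top of this.
    """
    if target_duration_s <= 0:
        raise ValueError("target_duration_s must be positive")
    return segments_from_end * target_duration_s


if __name__ == "__main__":
    for target in (10, 6, 2):
        print(f"{target} s segments -> at least ~{hls_buffer_latency_s(target):.0f} s behind live")
```

This is why shorter segments are one of the main levers for lowering HLS latency, and it is the kind of segment buffering that HESP aims to reduce.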

What is an Average Video Latency?

The average latency for an online video stream is about six seconds, close to the boundary between low latency and standard broadcast latency.

That said, here’s a breakdown of some common categories of latency.

| Type of Latency | Amount of Latency |
| --- | --- |
| Standard Broadcast Latency | 5-18 seconds |
| Low Latency | 1-5 seconds |
| Ultra-Low Latency | Less than 1 second |
| Real-Time Latency | Imperceptible to users |

Traditional television and some OTT streaming fall into the standard broadcast latency category, with traditional television often closer to 18 seconds. Other OTT streaming falls into the low latency category.

Real-time latency is used for video conferencing on communication platforms, such as Zoom, FaceTime, Google Meet, and other video chat tools.
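The categories in the table above can be expressed as a small lookup, for example to label measured latencies in a monitoring dashboard. This Python sketch uses the table’s boundaries; the table gives no number for real-time latency, so the 0.1 s cutoff here is an assumption borrowed from the 100 ms noticeability figure in the FAQ below.

```python
def latency_category(latency_s: float) -> str:
    """Map a measured latency (seconds) to the categories in the table.

    Boundaries follow the table; the 0.1 s real-time cutoff is an assumption.
    """
    if latency_s < 0:
        raise ValueError("latency cannot be negative")
    if latency_s < 0.1:
        return "Real-Time Latency"
    if latency_s < 1:
        return "Ultra-Low Latency"
    if latency_s <= 5:
        return "Low Latency"
    if latency_s <= 18:
        return "Standard Broadcast Latency"
    return "Above standard broadcast range"


if __name__ == "__main__":
    for measured in (0.05, 0.5, 3, 12, 30):
        print(measured, "s ->", latency_category(measured))
```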

Recent Technological Developments

Advancements in Network Technologies

Recent developments in network technologies are helping reduce latency in live streaming, particularly the L4S (Low Latency, Low Loss, Scalable Throughput) standard, which was developed at the IETF and is being rolled out by internet providers such as Comcast. L4S aims to improve internet performance by minimizing latency and network congestion. By keeping network queues short, it makes activities like live streaming smoother and more reliable for both broadcasters and viewers. Reducing end-to-end latency is crucial for real-time events, especially in sports broadcasting, where even a small delay can disrupt the experience.

AI-Powered Streaming Enhancements

Artificial intelligence is playing a key role in optimizing streaming performance. AI is now being used to enhance media delivery, such as in BytePlus MediaLive, which employs AI algorithms to ensure ultra-low latency streaming across various platforms. By analyzing data in real time, AI can adjust streaming quality and reduce glass-to-glass latency, ensuring a seamless experience for viewers. As AI technology advances, its ability to predict and resolve streaming latency causes will continue to improve, leading to better performance in live streaming environments.

AI Developments

1. AI-Driven Encoding and Compression

AI is making a significant impact on video encoding by optimizing compression algorithms. Better compression reduces file sizes while maintaining high quality, which ultimately helps reduce latency during streaming. For example, BytePlus MediaLive uses AI to deliver ultra-low latency media experiences for use cases like sports and gaming. These advancements are a major step forward in the constant effort to minimize buffering and latency, resulting in smoother viewing experiences.

2. AI in Content Management and Distribution

AI is also changing how content is managed and distributed. By optimizing how content is encoded, stored, and delivered, AI ensures high quality and low latency. It also automates production tasks, such as camera work and video editing, reducing the time and cost involved in content creation. The use of latency measurement tools helps broadcasters identify and minimize latency during content distribution, enhancing the overall user experience.

3. AI-Powered Personalization

AI enables broadcasters to offer personalized content delivery by analyzing viewer preferences and adjusting streams in real time. Disney’s ESPN network is using AI to personalize shows like “SportsCenter,” tailoring content for younger, streaming-focused audiences. Combined with adaptive bitrate streaming, which adjusts quality to each viewer’s connection, this approach can help keep playback smooth.

4. AI in Real-Time Analytics and Viewer Engagement

AI enhances viewer engagement by providing real-time analytics and interactive features. Amazon Prime Video’s integration of AI through “Prime Vision with Next Gen Stats” during NFL games allows for features like defensive alerts and prime targets, enriching the viewing experience. By optimizing latency during these real-time features, AI helps reduce buffering and keeps the action smooth.

5. AI for Automated Content Moderation

AI is also being used for real-time content moderation. By automatically filtering inappropriate content, AI helps ensure compliance with platform policies and improves the overall user experience. Because these checks run automatically, broadcasters can address issues quickly without adding noticeable delay to their live streams.

AI Integration in Streaming

AI integration in streaming is helping broadcasters optimize video quality and reduce latency. By incorporating AI technologies, platforms can analyze and adjust video streams in real time, improving encoding and delivery. This enables smoother viewing experiences, especially in live broadcasts. With the continuous development of AI, streaming services are becoming more efficient, reducing buffering times and improving the overall quality of content.

Real-Time AI Processing

Advancements in GPU technology and edge computing are making real-time AI processing more feasible in the streaming world. These technologies allow AI models to analyze and process video data faster, right at the source, reducing delays. By optimizing video encoding and delivery, broadcasters can ensure that content reaches viewers with minimal latency, leading to a better, more seamless streaming experience.

How to Measure Video Latency

Since latency affects the viewers’ experience, it is important to know what your streaming setup is capable of.

Measuring your latency can be a bit difficult. The most accurate way to measure video latency is to add a timestamp to your video and have someone watch the live stream. Instruct them to report the exact time that the time-stamped frame appears on their screen. Subtract the time on the timestamp from the time that the viewer saw the frame, and that is your latency. For this to work, the clock used for the timestamp and the viewer’s clock must be synchronized, for example by setting both to an internet time source.

If you don’t know how to timestamp your stream, you can also have someone watch your stream and tell them to record when a specific cue comes through. Take the time when the cue was performed and subtract it from the time the cue was viewed, and that will give you the latency.

The second method is less accurate since there is more room for error, but it will give you a good enough idea of the latency of your setup.
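Both methods come down to subtracting two clock readings, and repeating the test a few times and averaging smooths out the reaction-time error of the second method. Here is a minimal Python sketch; it assumes both times were written down as wall-clock times (HH:MM:SS.fff) from synchronized clocks on the same day, and the sample readings are made up.

```python
from datetime import datetime
from statistics import mean

TIME_FORMAT = "%H:%M:%S.%f"


def latency_seconds(sent: str, seen: str) -> float:
    """Latency = time the frame or cue was seen minus time it was captured.

    Assumes both readings fall on the same day (no midnight wraparound).
    """
    delta = datetime.strptime(seen, TIME_FORMAT) - datetime.strptime(sent, TIME_FORMAT)
    return delta.total_seconds()


def average_latency(samples: list[tuple[str, str]]) -> float:
    """Average several (captured, seen) measurements to reduce human error."""
    return mean(latency_seconds(sent, seen) for sent, seen in samples)


if __name__ == "__main__":
    # Made-up readings: (time on the timestamp or cue, time the viewer saw it)
    readings = [
        ("14:00:00.000", "14:00:12.400"),
        ("14:01:00.000", "14:01:12.900"),
        ("14:02:00.000", "14:02:12.200"),
    ]
    print(f"Average latency: {average_latency(readings):.2f} s")  # 12.50 s
```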

How to Reduce Latency While Streaming

There are a few different ways that broadcasters can reduce latency. Since the latency is determined by several components, broadcasters must take a holistic approach to reduce the latency of their streams.

First, make sure that you’re streaming with a fast internet connection. As we mentioned, it’s important to have a consistent upload speed of double the bandwidth you plan to use for your stream. It’s also important to use Ethernet whenever possible. Don’t opt for WiFi or cellular data unless absolutely necessary.

As you work to reduce your latency, make sure that you’re not damaging the quality of your stream. Of course, there might be a bit of a tradeoff between quality and latency, but make sure that your encoder configurations will still produce a sharp video image.

How to Reduce Latency at the Encoder

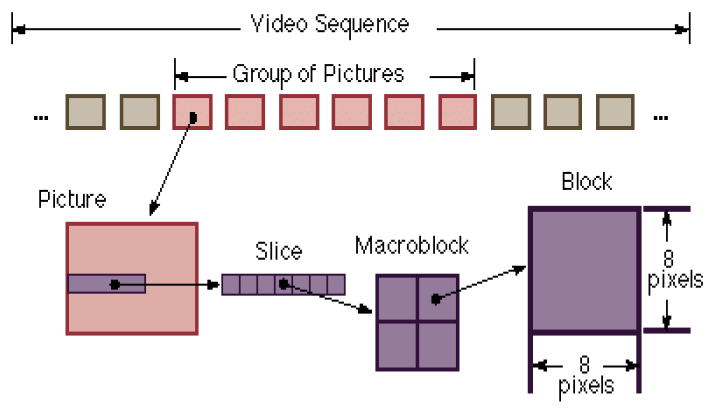

So how can latency be reduced at the encoder level? A video stream is made up of individual picture frames, and a frame is an entire picture (see diagram 1). Video is compressed using either frame-based techniques or sub-frame techniques, which usually work on “slices” of a frame.

A frame is made up of multiple slices, so if you encode slice by slice, you can reduce the encoder’s latency, since you don’t have to wait for the entire frame before transmitting the information.

Slice-based encoders have latencies of less than a frame, some as low as 10 to 30 milliseconds. A frame-based encoder typically has a latency of around 100 to 200 milliseconds.
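The arithmetic behind this is straightforward: at a given frame rate, a new frame arrives every 1/fps seconds, so an encoder that must collect a whole frame waits at least that long, while one working slice by slice can start after a fraction of it. The Python sketch below computes only that capture wait, assuming the picture arrives from the capture device line by line; real encoders add processing and buffering on top, which is why the figures quoted above are higher. The slice count of 4 is an example value.

```python
def frame_interval_ms(fps: float) -> float:
    """Time between frames: the minimum wait before a whole frame is available."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def slice_interval_ms(fps: float, slices_per_frame: int) -> float:
    """Minimum wait before the first slice of a frame can be encoded and sent."""
    if slices_per_frame < 1:
        raise ValueError("slices_per_frame must be at least 1")
    return frame_interval_ms(fps) / slices_per_frame


if __name__ == "__main__":
    fps = 30
    print(f"Whole frame: {frame_interval_ms(fps):.1f} ms")          # 33.3 ms
    print(f"One of 4 slices: {slice_interval_ms(fps, 4):.1f} ms")   # 8.3 ms
```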

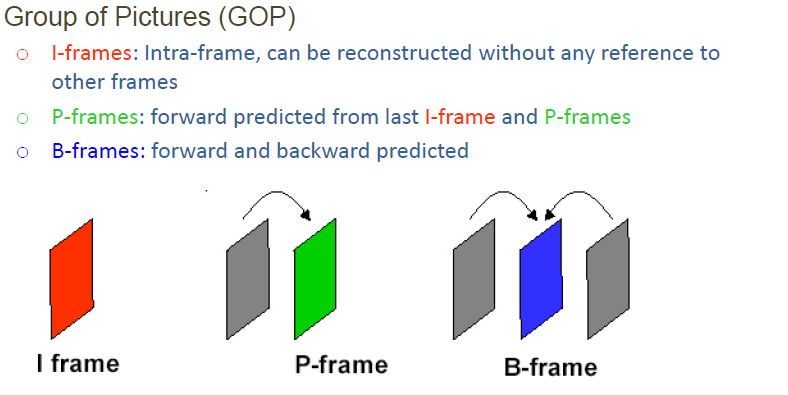

It is also possible to reduce latency by removing bidirectional (B) frames. A B-frame is compressed using references to both earlier and later frames, so the encoder has to wait for a future frame before it can encode it. This makes the encoder more efficient but also adds latency.

If you remove B frames then you have to increase the bit rate to achieve the same video quality level, a price many are willing to pay for reducing latency.
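The delay from B-frames comes from reordering: a B-frame can only be encoded after the later frame it references has been captured. The Python sketch below takes a display-order pattern of frame types, produces the order an encoder would code them in, and reports the worst-case extra wait in frames. It assumes the simple case where each run of B-frames references the next I- or P-frame, which is a simplification of how real encoders choose references.

```python
def coding_order(pattern: str) -> list[int]:
    """Return display indices in coding order for a pattern like 'IBBPBBP'.

    Each run of B-frames is held back until the following I/P frame is coded.
    """
    order: list[int] = []
    pending_b: list[int] = []
    for index, frame_type in enumerate(pattern):
        if frame_type == "B":
            pending_b.append(index)   # must wait for a future reference frame
        else:
            order.append(index)       # I/P frames are coded as soon as captured
            order.extend(pending_b)   # then the B-frames that were waiting on it
            pending_b.clear()
    if pending_b:
        raise ValueError("pattern cannot end with B-frames")
    return order


def max_reorder_delay_frames(pattern: str) -> int:
    """Largest number of frame intervals any frame waits beyond its own capture."""
    if not pattern:
        return 0
    highest_captured = -1
    delays = []
    for display_index in coding_order(pattern):
        # Coding this frame required capturing every frame up to highest_captured.
        highest_captured = max(highest_captured, display_index)
        delays.append(highest_captured - display_index)
    return max(delays)


if __name__ == "__main__":
    for pattern in ("IPPPPPP", "IBPBPBP", "IBBPBBP"):
        print(pattern, coding_order(pattern), max_reorder_delay_frames(pattern))
```

The worst-case wait equals the longest run of consecutive B-frames. At 30 fps each frame of waiting is about 33 ms, so removing two consecutive B-frames saves roughly 67 ms at the encoder, at the cost of the higher bitrate mentioned above.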

Related Streaming Metrics

Throughput and bandwidth are two technical components of live streaming that go hand in hand with latency. We’ve briefly touched upon each of these metrics throughout this post, but to understand their relationship on a more technical level, it’s best to compare them all side by side.

As a refresher, bandwidth is the maximum amount of data your connection can transfer in a given amount of time, and throughput is the amount of data that is actually transferred in that time.

When you are considering the relationship between latency, throughput, and bandwidth, you can picture cars traveling through a tunnel. Only so many cars can go through at a time without causing backed-up traffic.

In this scenario, bandwidth is the width of the tunnel, throughput is the number of cars that actually get through per minute, and latency is the time it takes each car to get through. To reduce your latency, your streaming setup needs enough bandwidth and throughput for the bitrate of the video you are pushing through.
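The tunnel analogy can be put into numbers: the time to deliver a chunk of video is roughly its size divided by the throughput, plus the network’s propagation delay. The Python sketch below applies that to one HLS-style segment; the 2-second segment length, the 5 Mbps bitrate, and the 40 ms one-way delay are example values, and real transfers also pay protocol overhead that this ignores.

```python
def transfer_time_s(segment_s: float, bitrate_mbps: float,
                    throughput_mbps: float, one_way_delay_s: float) -> float:
    """Seconds to deliver one segment: size / throughput + propagation delay."""
    if throughput_mbps <= 0:
        raise ValueError("throughput must be positive")
    size_megabits = segment_s * bitrate_mbps
    return size_megabits / throughput_mbps + one_way_delay_s


if __name__ == "__main__":
    # 2 s of 5 Mbps video = 10 megabits per segment.
    for throughput in (50, 20, 6):
        t = transfer_time_s(2.0, 5.0, throughput, 0.04)
        print(f"{throughput} Mbps throughput -> {t:.2f} s per 2 s segment")
```

Once the transfer time approaches the segment duration (here, as throughput drops toward 5 Mbps), the viewer can no longer keep up, and latency grows with every segment.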

Practical Tips for Latency Reduction

Adaptive Bitrate Streaming (ABR)

Adaptive Bitrate Streaming (ABR) adjusts video quality in real time based on each viewer’s network conditions. The stream is encoded at several bitrates, and if a viewer’s connection slows down, the player automatically switches to a lower-quality version to prevent buffering, which would otherwise add delay. When the connection improves, ABR switches back to higher quality, keeping playback smooth and latency low without unnecessary drops in quality.
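At its core, an ABR player repeatedly picks the highest rendition whose bitrate fits within the throughput it has recently measured, usually with a safety margin. The Python sketch below shows only that selection step; the bitrate ladder and the 0.8 safety factor are hypothetical, and real players such as hls.js or dash.js use more elaborate throughput- and buffer-based heuristics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


# Hypothetical bitrate ladder, lowest to highest.
LADDER = [
    Rendition("360p", 800),
    Rendition("480p", 1400),
    Rendition("720p", 2800),
    Rendition("1080p", 5000),
]


def pick_rendition(measured_kbps: float, ladder: list[Rendition] = LADDER,
                   safety: float = 0.8) -> Rendition:
    """Pick the highest rendition whose bitrate fits in a fraction of the throughput."""
    budget = measured_kbps * safety
    fitting = [r for r in ladder if r.bitrate_kbps <= budget]
    if not fitting:
        # Nothing fits: fall back to the lowest rendition rather than stall.
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(fitting, key=lambda r: r.bitrate_kbps)


if __name__ == "__main__":
    for throughput in (10_000, 4_000, 1_200, 500):
        print(throughput, "kbps ->", pick_rendition(throughput).name)
```

The safety margin leaves room for throughput to dip before the player’s buffer runs dry, which is what keeps switching smooth instead of stalling.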

Edge Computing

Edge computing brings content closer to the end-user by processing data at locations nearer to them. By reducing the distance data has to travel, it significantly lowers latency. This means faster delivery of content, resulting in a better streaming experience, especially in live broadcasts where every second counts. Edge computing is a key tool for broadcasters aiming to minimize delays and improve viewer satisfaction.
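Part of what edge delivery saves is plain physics: light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200,000 km per second, or about 5 microseconds per kilometer. The Python sketch below estimates the round-trip propagation time for a few example distances; it assumes a straight fiber path and ignores routing, queuing, and processing delays, so real round trips will be longer.

```python
FIBER_KM_PER_S = 200_000  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring routing and queuing."""
    return 2 * distance_km / FIBER_KM_PER_S * 1000


if __name__ == "__main__":
    for label, km in [("nearby edge server", 50), ("same continent", 2_000),
                      ("across an ocean", 8_000)]:
        print(f"{label} ({km} km): ~{round_trip_ms(km):.1f} ms round trip")
```

A single round trip is small next to multi-second player buffers, but streaming involves many of them (connection setup, playlist and segment requests, retransmissions), so shorter distances add up.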

Case Studies and Real-world Applications

Several companies have implemented low-latency solutions to improve the quality of their broadcasts.

For example, Sky Sports introduced a ‘Live Sync’ button to reduce broadcast delays during live sports events. This feature allows viewers to experience real-time action, syncing the broadcast feed with the live event as closely as possible.

Another notable example is NBC Sports, which uses advanced compression technologies and dedicated fiber optic lines to reduce video latency during the Olympics, ensuring fans receive up-to-the-minute coverage. These industry-leading initiatives demonstrate how addressing video latency can enhance the viewing experience, especially in fast-paced events where timing is critical.

Video Latency on Dacast

As we covered, video latency has a lot to do with the video streaming host you use. Streams on Dacast have a latency of 12 to 15 seconds, within the standard broadcast latency range described above.

This level of latency is achieved through the combination of HLS delivery and RTMP ingest. Dacast users can also use HLS ingest, but the latency is not as low with this approach.

Dacast also supports web conference streaming through a Zoom integration for even lower latency streaming.

Head over to our Knowledgebase to check out our dedicated guide to setting up a low latency streaming channel on Dacast.

FAQs

1. What is a good latency for video?

A good latency for video streaming is about six seconds. Anything lower than that is even better and tends to give viewers a near-real-time viewing experience. That said, standard broadcast latency ranges from 5 to 18 seconds, and with Dacast you can achieve a latency of 12 to 15 seconds.

2. How do I reduce video latency?

There are two main ways to reduce the latency of your videos. First, get your internet connection in order. Use a fast and reliable connection to ensure your stream is uploaded quickly and in the best possible quality.

Additionally, you can reduce your latency at the encoder level by speeding up the encoding process, for example by encoding slice by slice or removing B-frames. At this stage, you can choose to retain your quality or sacrifice some quality for better latency; the choice is yours.

3. What causes video latency?

Latency builds up as the video travels from its source to the viewer’s device. The video passes through an encoder, is relayed to the CDN, travels from the CDN to the viewer’s device, and is then decoded for playback. Each of these steps takes time, and together they add up to the latency of your stream.

4. How much video latency is noticeable?

Video latency gets noticed only when it becomes longer than 100ms. At this point, you can start noticing a lag between the actual event and the livestream. That said, if you’re performing, you might start noticing the latency as early as 10ms.

5. What is the latency of a video?

In simple terms, video latency is the time that the video takes to travel from its source (the camera) to the destination (the viewers’ device). This time is typically represented in milliseconds and can go up to 18 seconds for normal broadcasting. A lower latency is desirable for a near-real-time viewing experience.

Conclusion

As a broadcaster, it’s important to have a solid understanding of latency. Knowing how latency works and how you can control the latency of your streaming setup will give you more power over the outcome of your stream.

Are you looking for a low latency live streaming platform for professional streaming? Dacast might be the option for you. Our online video platform includes a variety of features that support streaming at the professional level, including an HTML5 all-device video player, advanced analytics, a video API, a white-label streaming platform, RTMP ingest and playback, and 24/7 customer support.

You can try Dacast risk-free for 14 days with our free trial. Sign up today to start streaming. No credit card or binding contracts are required.

For regular live streaming tips and exclusive offers, you can join the Dacast LinkedIn group.

Please note that this post was originally written by Mike Galli, CEO of Niagara Video. It was revised in 2021 by Emily Krings to include the most up-to-date information. Emily is a strategic content writer and storyteller. She specializes in helping businesses create blog content that connects with their audience.
